Data Storage for AI

Capture the value of artificial intelligence with high-capacity, high-performance data storage across the six stages of the AI Data Cycle.

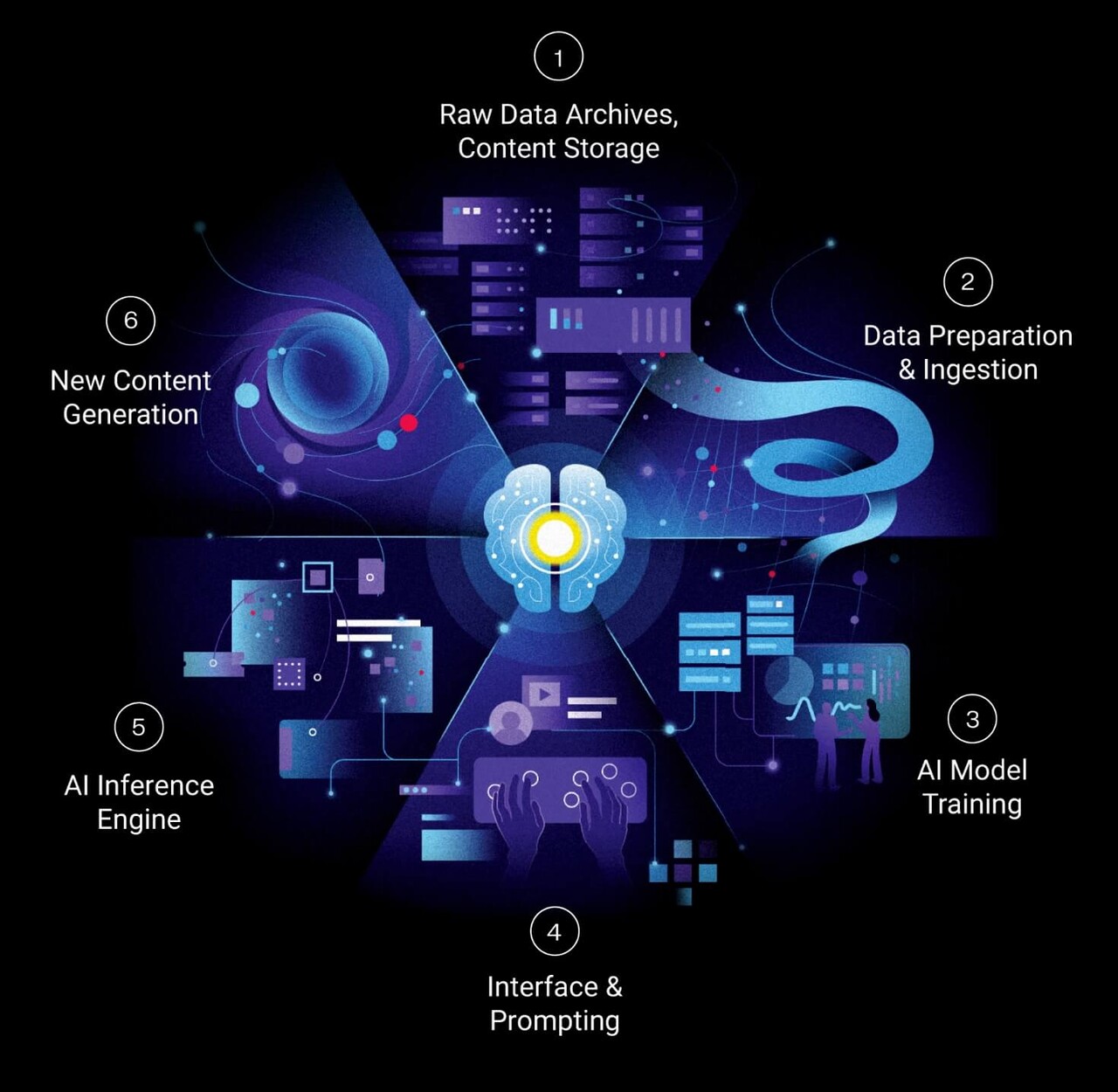

Introducing the AI Data Cycle Framework

AI models operate in a self-perpetuating, continuous loop of data consumption and generation. As AI grows and evolves, it creates even more data across six distinct stages — an AI Data Cycle with specific storage requirements at every stage.

1. Raw data archives and content storage
2. Data preparation and ingestion
3. AI model training
4. Interface and prompting
5. AI inference engine
6. New content generation

Optimize Data Storage Choices Across Your Own AI Data Cycle

High-capacity, high-performance, and compute-intensive enterprise-class HDDs to help you maximize performance and balance TCO throughout your AI architecture.

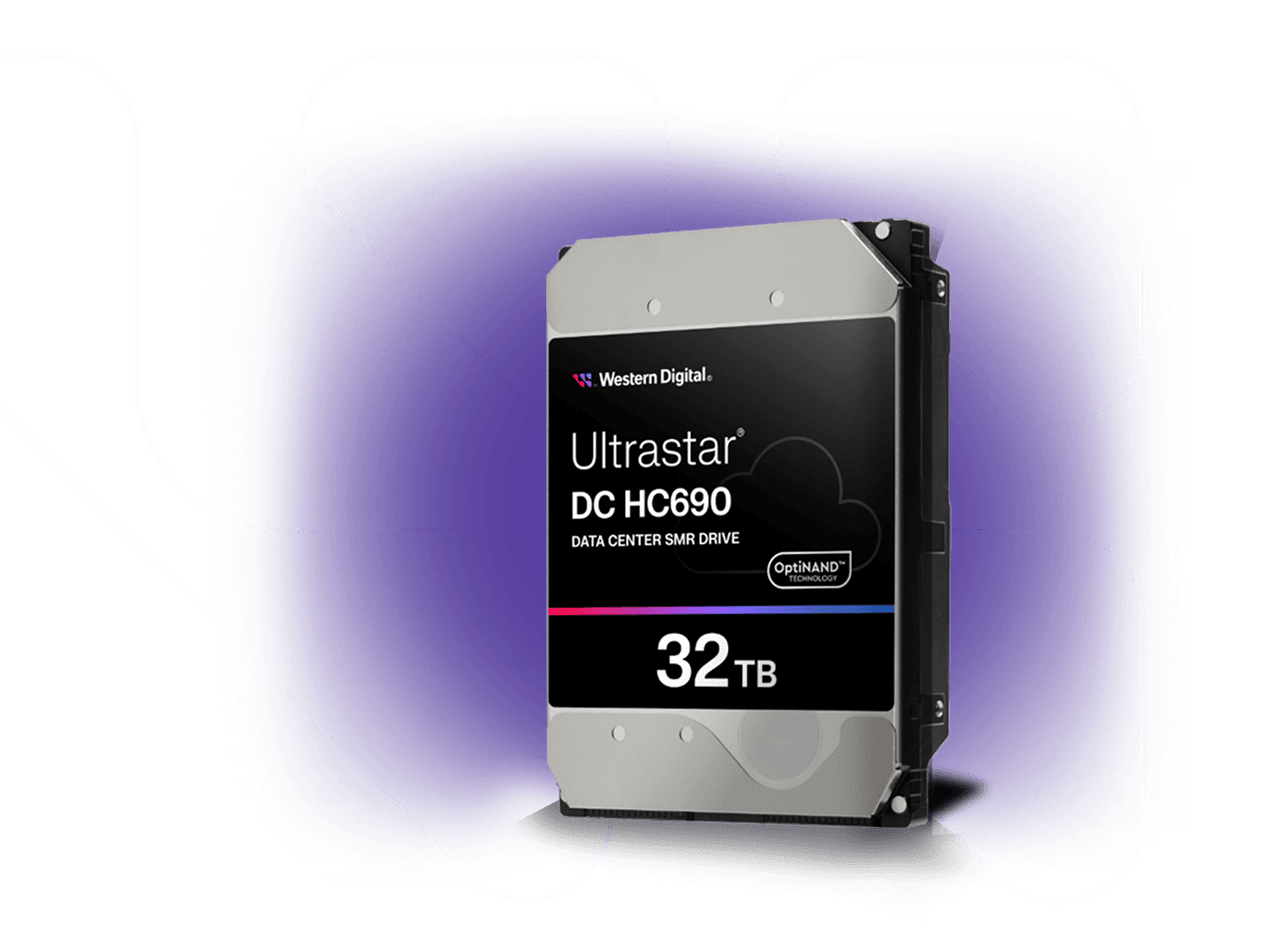

ULTRASTAR DC HC690 HDD

Archive Critical AI Content to Extract Future Value

TCO-optimized capacity up to 32TB¹

Massive capacity purpose-built to handle rapidly expanding AI datasets at key points across the AI Data Cycle.

Built to keep TCO low

Designed to handle massive datasets and streamline preparation for training AI models and refining performance.

Ready for AI architecture

Ideal for massive data storage in hyperscale cloud and enterprise data centers with seamless qualification and integration for rapid deployment.

PRESS RELEASE

Western Digital Introduces New AI Data Cycle Framework to Help Customers Capture the Value of AI

Disclosures

1. 1GB = 1 billion bytes and 1TB = 1 trillion bytes. Actual user capacity may be less depending on operating environment and RAID configuration (if applicable).
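
As a rough illustration of the disclosure above, the sketch below converts a capacity stated in decimal terabytes into binary tebibytes (TiB), the unit many operating systems use when reporting drive size. The helper name `decimal_tb_to_tib` is hypothetical, and the calculation deliberately ignores file-system and RAID overhead, which depend on the deployment.

```python
BYTES_PER_TB = 10**12   # decimal terabyte, as defined in the disclosure
BYTES_PER_TIB = 2**40   # binary tebibyte


def decimal_tb_to_tib(capacity_tb: float) -> float:
    """Convert a capacity in decimal TB to binary TiB (hypothetical helper)."""
    return capacity_tb * BYTES_PER_TB / BYTES_PER_TIB


if __name__ == "__main__":
    # Illustrative only: the 32TB figure is the maximum capacity quoted above.
    print(f"32 TB is about {decimal_tb_to_tib(32):.2f} TiB")
```

For a 32TB drive this works out to roughly 29.1 TiB before any formatting or RAID overhead is taken into account.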